Touchscreen technology has become so ubiquitous in modern life that it’s difficult to imagine a time when human-computer interaction required keyboards, mice, and indirect manipulation. Today, billions of people worldwide interact with touchscreens daily through smartphones, tablets, interactive kiosks, ATMs, and institutional displays. Yet this seemingly modern technology actually traces its origins back nearly six decades to pioneering research in the 1960s.

The journey from early experimental touch interfaces to today’s sophisticated multi-touch displays represents one of technology’s most transformative evolutions. Understanding this history provides essential context for appreciating how far touchscreen technology has advanced and where it is heading as new applications emerge across educational institutions, museums, corporate facilities, and public spaces worldwide.

This comprehensive guide explores the complete history of touchscreen technology—from the first finger-driven interfaces developed in 1965 through the smartphone revolution and modern institutional applications. You’ll discover the key inventors who pioneered different touch technologies, understand how various detection methods evolved, and learn how touchscreens transformed from laboratory curiosities into essential tools reshaping human interaction with digital information.

The evolution of touchscreen technology fundamentally changed expectations about human-computer interaction. What began as specialized industrial applications gradually expanded into consumer electronics, eventually becoming the dominant interface paradigm through which most people now access digital content, navigate information systems, and interact with organizational recognition displays.

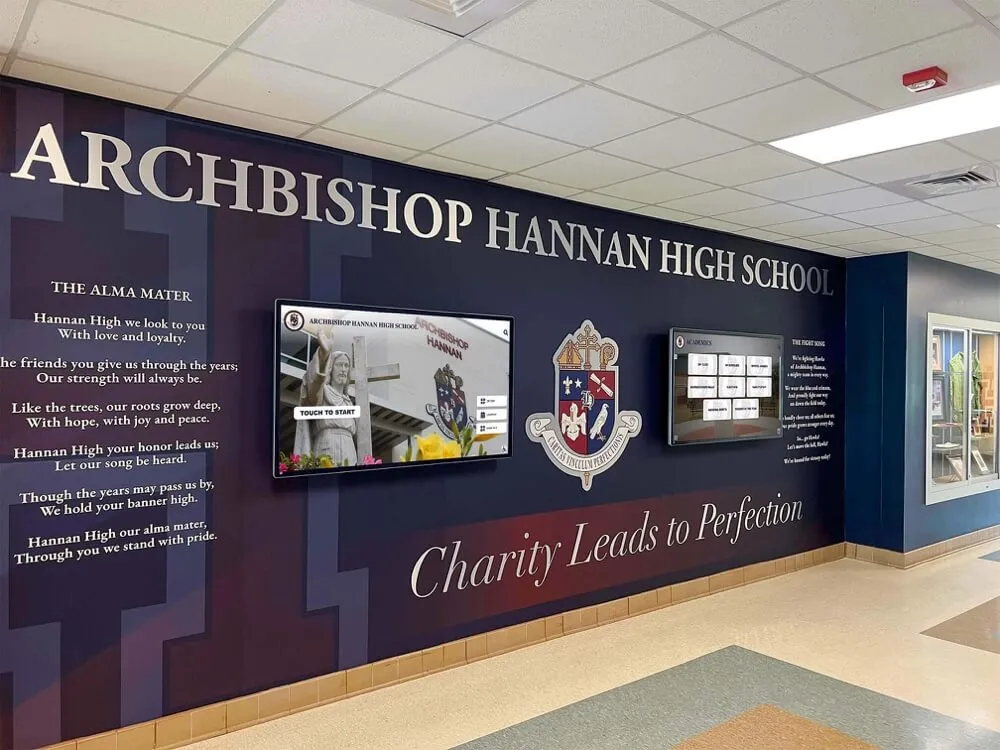

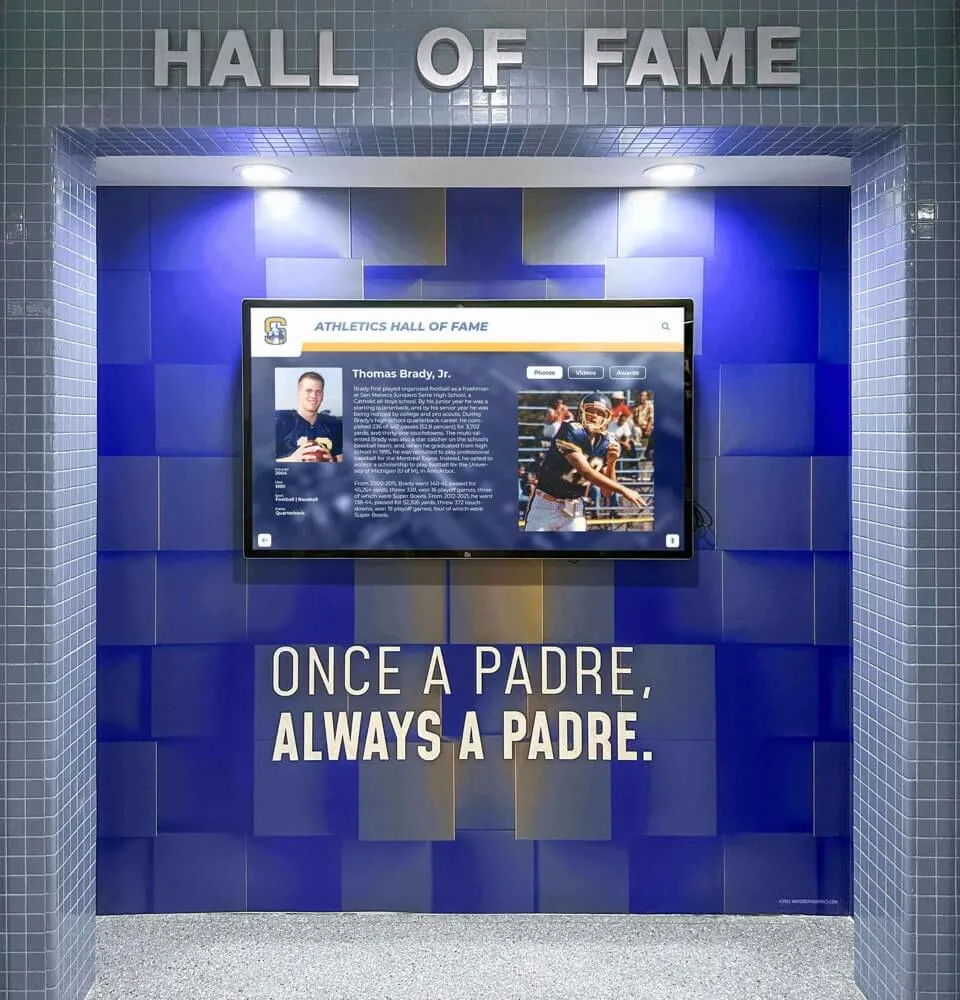

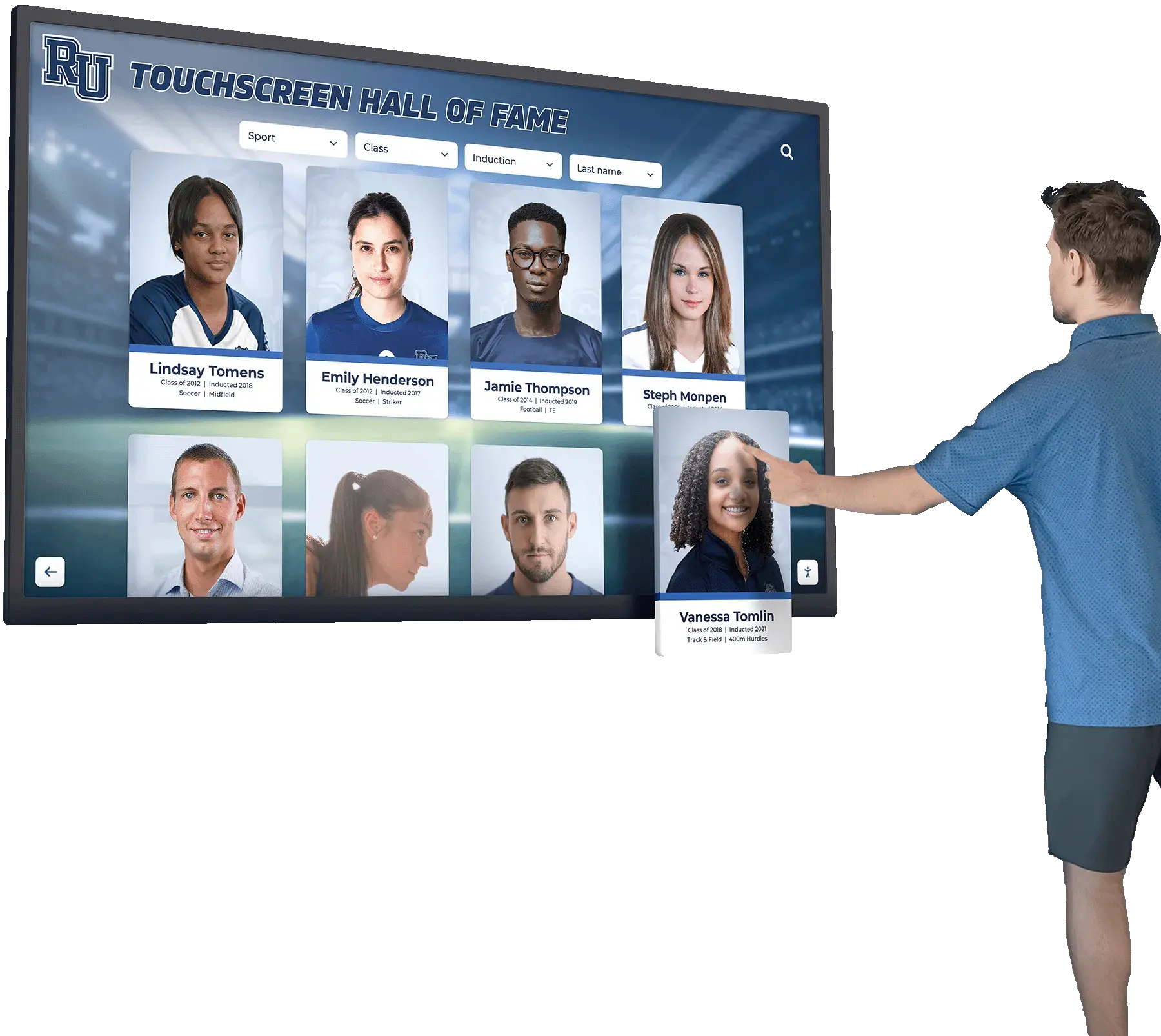

Modern touchscreen displays enable intuitive interaction through direct manipulation, fundamentally changing how people engage with digital content

The Early Foundations: Pre-Touch Interface Technology (1940s-1960s)

Before touchscreen technology emerged, researchers explored alternative input methods beyond keyboards that might create more natural human-computer interaction.

Electronic Music and Touch Sensitivity

The conceptual foundations for touch-sensitive technology appeared surprisingly early in electronic music applications. In 1948, Canadian physicist Hugh Le Caine developed the Electronic Sackbut, an early synthesizer featuring touch-sensitive keys that responded to pressure variations. While not a touchscreen by modern definitions, this instrument demonstrated that electronic systems could detect and respond to subtle variations in human touch—a foundational principle for later touchscreen development.

Le Caine’s work at the National Research Council of Canada established that electronic circuits could sense pressure differences and translate physical touch into electronic signals controlling sound parameters. This proof of concept influenced subsequent researchers exploring direct manipulation interfaces for computer systems.

Light Pen Technology and Direct Manipulation

During the 1950s and early 1960s, researchers developed light pen technology enabling users to point directly at computer displays rather than using indirect input devices. The SAGE (Semi-Automatic Ground Environment) air defense system, which became operational in the late 1950s, featured light gun interfaces allowing operators to point at screens to select aircraft positions.

Light pens worked by detecting screen refresh timing when operators pointed them at specific display locations. While requiring physical styluses rather than finger touch, light pens established the direct manipulation paradigm where users interacted by pointing at desired screen elements—conceptually anticipating touchscreen interfaces.

The MIT Lincoln Laboratory’s TX-2 computer, completed in 1958, advanced light pen applications substantially. Ivan Sutherland’s revolutionary Sketchpad program, demonstrated in 1963, used light pen interaction to enable direct drawing and manipulation of graphical objects on computer displays. Sketchpad proved that direct screen manipulation created more intuitive interaction than keyboard-only interfaces for visual tasks.

The Birth of Touchscreen Technology (1965-1970)

The first true touchscreen technology emerged in the mid-1960s through pioneering work by British engineer E.A. Johnson at the Royal Radar Establishment in Malvern, England.

E.A. Johnson and the First Capacitive Touchscreen

Eric Arthur Johnson, while working on improving air traffic control interfaces, published the first formal description of touchscreen technology in an article titled “Touch Display - A Novel Input/Output Device for Computers” in the October 1965 issue of Electronics Letters. Johnson’s innovation used capacitive technology detecting the electrical properties of human fingertips to sense touch locations on display surfaces.

Johnson developed his touchscreen system specifically to address limitations in air traffic control systems where operators needed to rapidly identify and track aircraft positions. Traditional input methods proved too slow and indirect for real-time tracking applications. His capacitive touchscreen enabled controllers to touch aircraft symbols directly on radar displays, dramatically improving response times and reducing operator errors.

The technology worked by placing a transparent capacitive sensor overlay on cathode ray tube (CRT) displays. When operators touched the screen surface with their fingers, the electrical properties of human skin altered the capacitive field at the touch location. Electronic circuits detected these capacitance changes and calculated touch coordinates, transmitting position data to the computer system.

Modern capacitive touchscreens trace their lineage directly to Johnson's pioneering 1965 air traffic control interface

Johnson’s work represented genuine innovation rather than incremental improvement. Before his development, no systems existed enabling computers to detect and respond to finger touches on display surfaces. His published research included detailed circuit diagrams and technical specifications enabling other researchers to replicate and extend the technology.

The British air traffic control system deployed Johnson’s touchscreen technology operationally in 1967, representing the world’s first practical touchscreen application. Controllers used the system successfully for several years, validating touchscreen viability for critical real-time applications where interface speed and directness provided measurable operational advantages.

Expansion and Refinement of Capacitive Technology

Johnson continued developing touchscreen technology throughout the late 1960s. In a 1967 paper titled “Touch Displays: A Programmed Man-Machine Interface,” he described improvements enabling multi-dimensional touch sensing and more precise coordinate detection. He received a U.S. patent for his touchscreen technology in 1969 (U.S. Patent 3,482,241), formally establishing intellectual property protection for capacitive touch detection methods.

Johnson’s capacitive approach created the foundation for modern smartphone and tablet touchscreens. While his original implementation used analog electronics and required substantial supporting circuitry, the core principles—detecting capacitance changes caused by conductive objects touching sensor surfaces—remain fundamental to contemporary capacitive touchscreen technology.

CERN and Alternative Capacitive Approaches

Parallel touchscreen development occurred at CERN (European Organization for Nuclear Research) in Geneva during the early 1970s. Engineers Frank Beck and Bent Stumpe developed a transparent capacitive touchscreen for controlling the SPS (Super Proton Synchrotron) accelerator. Their system, completed in 1973, used a different capacitive sensing approach than Johnson’s but achieved similar touch detection capabilities.

Beck and Stumpe’s design featured transparent conductive layers creating coordinate grids. When operators touched screens, their fingers’ electrical properties altered capacitance at specific grid intersections, enabling position detection. They published their technical approach in a 1973 paper, contributing to growing academic and engineering literature around touchscreen possibilities.

The CERN team focused on industrial control applications rather than air traffic control, demonstrating touchscreen versatility across different operational domains. Their work proved that touch interfaces could simplify complex control tasks requiring operators to adjust multiple parameters quickly while monitoring real-time system states.

Resistive Technology and Commercial Development (1970s)

While capacitive touchscreens showed promise for specialized applications, resistive technology emerged during the 1970s as an alternative approach offering different advantages particularly suited to commercial development.

Dr. Sam Hurst and the Birth of Resistive Touchscreens

Dr. G. Samuel Hurst, a physicist at Oak Ridge National Laboratory in Tennessee, developed resistive touchscreen technology beginning in 1971. Hurst’s invention emerged from practical frustration—he needed efficient methods for reading large volumes of data from bubble chamber photographs in particle physics research. Traditional digitizing methods proved tediously slow for the massive data sets his research generated.

Hurst developed the “Elograph” (electronic graph), a touch-sensitive panel using resistive technology to detect touch positions. His initial system used an opaque resistive panel with voltage gradients in perpendicular directions. When users pressed the panel surface with a stylus, electrical contact occurred at the touch point, creating measurable voltage changes that electronics could translate into coordinate positions.
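
The voltage-to-coordinate step described above can be sketched in a few lines. This is a minimal illustration, not the Elograph's actual circuitry: it assumes an idealized, perfectly linear voltage gradient along each axis, and the function name, the 10-bit converter range, and the panel dimensions are all hypothetical.

```python
def adc_to_position(adc_x, adc_y, adc_max=1023, width_mm=200.0, height_mm=150.0):
    """Map raw voltage readings to a position on a resistive panel.

    Assumes an ideal linear gradient on each axis: a reading of 0 at one
    edge and adc_max at the opposite edge, so position is proportional
    to the measured voltage. All default values are illustrative.
    """
    if not (0 <= adc_x <= adc_max and 0 <= adc_y <= adc_max):
        raise ValueError("ADC reading out of range")
    x_mm = adc_x / adc_max * width_mm
    y_mm = adc_y / adc_max * height_mm
    return x_mm, y_mm
```

Real panels are not perfectly linear, which is one reason resistive screens typically needed a calibration step to map readings onto display coordinates.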

The resistive approach offered several advantages over early capacitive systems. Resistive screens responded to any touch input—fingers, styluses, gloved hands, or any object applying pressure—whereas capacitive screens required conductive materials. This versatility made resistive technology appealing for industrial, medical, and outdoor applications where users might wear gloves or need stylus precision.

Transparent Resistive Screens and Commercial Viability

Hurst’s early opaque resistive panels worked effectively for digitizing applications but weren’t suitable for computer display overlays. Recognizing commercial potential, Hurst and his colleagues at Oak Ridge National Laboratory worked to develop transparent resistive touchscreens that could overlay video displays without obscuring viewed content.

In 1974, Hurst and his team successfully created the first transparent resistive touchscreen, receiving a patent in 1977 (U.S. Patent 4,071,689). This breakthrough transformed touchscreens from specialized data entry tools into practical interfaces for interactive computer systems. The transparent design used thin flexible films coated with transparent conductive materials like indium tin oxide (ITO). When users touched the top flexible layer, it made electrical contact with the bottom rigid layer at the touch point, enabling position detection through voltage measurements.

Hurst had founded Elographics (later renamed Elo TouchSystems, now simply Elo) in 1971 to commercialize his touch sensor technology. Elo became the dominant touchscreen manufacturer for decades, supplying screens for industrial controls, point-of-sale terminals, ATMs, and information kiosks throughout the 1980s and 1990s.

Public Debut at the 1982 World’s Fair

Resistive touchscreen technology received major public exposure at the 1982 World’s Fair in Knoxville, Tennessee, where Elographics demonstrated interactive information kiosks featuring transparent resistive touchscreens. Fair visitors could touch screen maps to find pavilions, restaurants, and attractions, experiencing one of the first widely-accessible public touchscreen applications.

The World’s Fair demonstration proved touchscreen viability for consumer-facing applications beyond industrial controls. Visitors with no technical training successfully navigated touchscreen interfaces intuitively, validating that direct manipulation required minimal instruction compared to keyboard and mouse interfaces that many consumers found intimidating during the personal computer’s early years.

Educational institutions became early adopters of touchscreen technology for student-facing information systems and recognition displays

Infrared and Acoustic Technology Alternatives (1970s-1980s)

Beyond capacitive and resistive approaches, researchers developed alternative touchscreen technologies using infrared light beams and acoustic surface waves during the 1970s and 1980s.

Infrared Touch Technology

Infrared touchscreens used LED emitters and photodetectors arranged around display perimeters, creating grids of invisible infrared light beams across screen surfaces. When users touched screens, their fingers interrupted infrared beams at specific coordinates, enabling position detection by identifying which beams were blocked.
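
As a rough sketch of the beam-interruption logic, the function below takes the blocked/unblocked state of each beam and returns the center of the blocked region. The function name, the boolean-list input format, and the 5 mm beam spacing are assumptions made for illustration, not details of any particular product.

```python
def locate_touch(blocked_x, blocked_y, pitch_mm=5.0):
    """Estimate a single touch position from interrupted infrared beams.

    blocked_x / blocked_y: one boolean per beam along each axis,
    True where the detector no longer sees its emitter.
    Returns (x_mm, y_mm), or None if no beam is blocked on either axis.
    """
    def center(blocked):
        indices = [i for i, is_blocked in enumerate(blocked) if is_blocked]
        if not indices:
            return None
        # A finger usually blocks several adjacent beams; use their midpoint.
        return sum(indices) / len(indices) * pitch_mm

    x = center(blocked_x)
    y = center(blocked_y)
    if x is None or y is None:
        return None
    return x, y
```

Averaging the blocked beams only makes sense for one touch at a time: two simultaneous fingers would be merged into a single position between them.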

The University of Illinois developed one prominent infrared touch system for their PLATO IV educational computer terminals beginning in 1972. PLATO (Programmed Logic for Automatic Teaching Operations) represented one of the first large-scale computer-based education systems, eventually serving thousands of students across multiple institutions. The infrared touch panels enabled students to select answers, navigate lesson content, and interact with educational software without keyboards.

PLATO’s infrared touchscreens gained visibility as the system expanded during the 1970s, introducing many students and educators to touch interface concepts years before consumer touchscreen devices emerged. The infrared approach offered advantages including durability (no physical overlay to wear out), high optical clarity (no layers between users and displays), and immunity to surface contaminants that could interfere with capacitive or resistive sensing.

Hewlett-Packard deployed infrared touchscreen technology in the HP-150 personal computer introduced in 1983. The HP-150 was marketed as “touch sensitive,” featuring infrared sensors surrounding the CRT display. While innovative, the HP-150’s infrared system required precise beam alignment and suffered from reliability issues as infrared emitters and detectors accumulated dust or fell out of calibration. The computer failed to achieve significant market success, though it demonstrated consumer interest in touch interfaces.

Acoustic Surface Wave Technology

Surface acoustic wave (SAW) touchscreens, developed during the 1980s, used ultrasonic waves traveling across glass panel surfaces. Transducers at screen corners generated acoustic waves that reflectors distributed across screen surfaces. When users touched screens, their fingers absorbed acoustic energy at touch points. Receiving transducers detected these acoustic disruptions, enabling coordinate calculation.

SAW technology offered excellent optical clarity since no coatings or overlays obscured displays. The glass surface proved highly durable and resistant to scratching. However, SAW screens required clean surfaces—liquids, contaminants, or adhering materials could block acoustic waves, creating “phantom touches” or dead spots. This environmental sensitivity limited SAW deployment primarily to indoor applications in controlled environments.

Despite limitations, SAW touchscreens found success in specialized applications including point-of-sale terminals, industrial control panels, and interactive kiosks where their optical clarity and surface durability outweighed environmental sensitivity concerns. Some manufacturers continue producing SAW touchscreens for specific niche applications, though capacitive technology has largely displaced SAW in most modern deployments.

Personal Computing Integration (1980s-1990s)

The 1980s and 1990s saw increasing efforts to integrate touchscreen interfaces into personal computers, though mainstream adoption remained elusive until later mobile device success validated touch interaction paradigms.

Early Touchscreen Personal Computers

Beyond the HP-150, several manufacturers attempted touchscreen-equipped personal computers during the 1980s. The Convergent Technologies WorkSlate, introduced in 1983, featured a portable form factor with stylus-based resistive touchscreen. Marketed toward mobile professionals, the WorkSlate ran custom software optimized for pen input but failed to gain significant market share against laptop computers with traditional keyboards.

In 1989, Atari Corporation released the Atari STacy, a portable computer combining the Atari ST desktop computer with a flip-up LCD screen and optional digitizer tablet. While not technically a touchscreen, the STacy represented growing interest in direct screen interaction for portable computing applications.

These early attempts generally failed because operating systems and application software remained designed for keyboard and mouse interaction. Touchscreen support required custom software that limited available applications, while retrofitting touch onto keyboard-optimized interfaces created awkward hybrid experiences that pleased neither traditional users nor those seeking pure touch interaction.

The IBM Simon: First Touchscreen Smartphone

IBM and BellSouth jointly developed the IBM Simon Personal Communicator, released in 1994 and widely recognized as the world’s first smartphone. The Simon featured a monochrome touchscreen enabling stylus-based interaction for phone dialing, contact management, note-taking, and even email access. Though bulky by modern standards and commercially unsuccessful (selling only 50,000 units during its brief market life), the Simon demonstrated that touchscreen interfaces could unify communication and computing in handheld devices.

The Simon’s resistive touchscreen required stylus input rather than finger touch, reflecting prevailing assumptions that handheld device screens were too small for accurate finger-based interaction. This stylus-centric design philosophy would dominate mobile touchscreen devices through the early 2000s, with Windows Mobile and Palm OS devices emphasizing stylus input over direct finger touch.

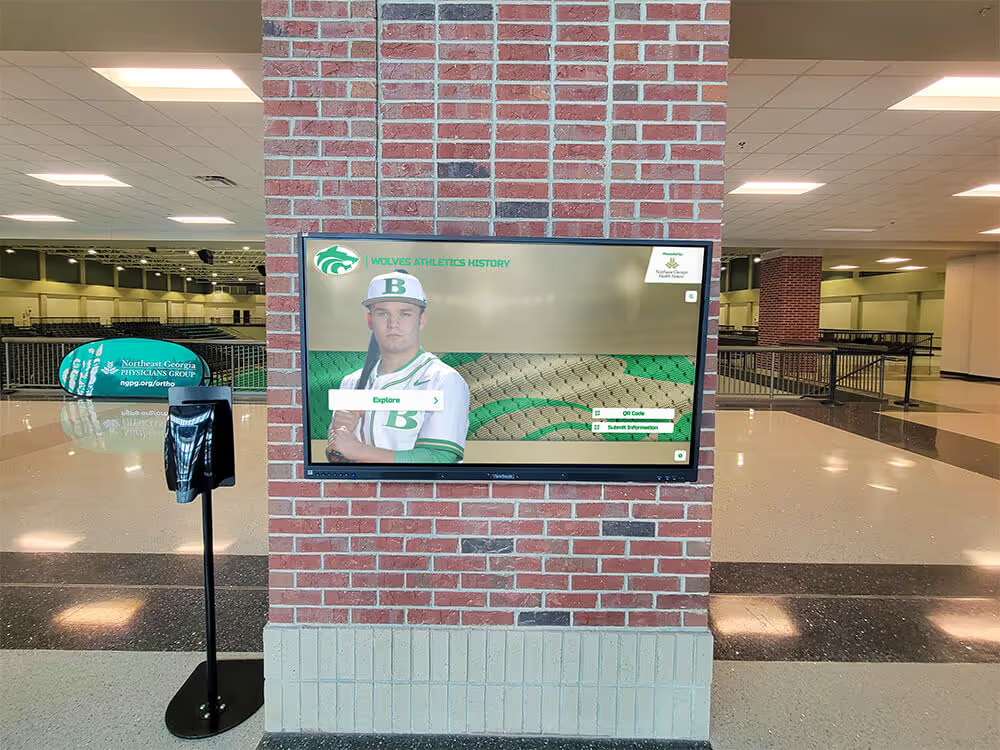

Public kiosks and institutional displays drove touchscreen adoption before consumer devices popularized the technology

Commercial Kiosk Expansion

While touchscreen personal computers struggled for acceptance, specialized applications flourished throughout the 1980s and 1990s. ATMs (automated teller machines) increasingly adopted touchscreen interfaces simplifying banking transactions for consumers unfamiliar with computer interfaces. Retail point-of-sale terminals used touchscreens enabling faster order entry compared to traditional cash registers.

Museums, airports, shopping malls, and tourist attractions deployed information kiosks featuring touchscreen wayfinding and directory applications. These public-facing deployments demonstrated touchscreen advantages for users requiring instant productivity without training. Unlike personal computers where users amortized learning curves across extended usage periods, kiosk visitors needed immediate intuitive interaction—a requirement touchscreens satisfied better than keyboard and mouse alternatives.

Organizations implementing interactive touchscreen displays for institutional recognition found that visitors naturally understood direct manipulation without instruction, creating engagement levels impossible with traditional static displays or keyboard-driven information systems.

Multi-Touch Technology Development (1980s-1990s)

While single-touch screens dominated commercial applications, researchers explored multi-touch technology enabling detection of multiple simultaneous touch points—capabilities essential for modern gesture-based interaction.

Early Multi-Touch Research

Nimish Mehta at the University of Toronto developed one of the first true multi-touch systems in 1982 as part of his master’s thesis work. Mehta’s “Flexible Machine Interface” used a frosted glass panel with a camera underneath. When users touched the panel, their fingers appeared as dark spots captured by the camera. Computer vision software identified touch locations and tracked individual fingers, enabling simultaneous multi-point input.
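
The general idea of camera-based touch detection (threshold the image, group dark pixels into blobs, report one point per blob) can be sketched as follows. This is an illustrative reconstruction of the technique, not Mehta's actual software; the function name, the grayscale input format, and the threshold value are assumptions.

```python
def find_touches(image, threshold=50):
    """Find touch points in a grayscale camera frame.

    image: 2D list of brightness values (0-255). Pixels darker than
    `threshold` are treated as finger contact. Returns one (row, col)
    centroid per connected dark blob, in scan order.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    touches = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] >= threshold:
                continue
            # Flood-fill one blob using 4-connectivity.
            stack, pixels = [(r, c)], []
            seen[r][c] = True
            while stack:
                pr, pc = stack.pop()
                pixels.append((pr, pc))
                for nr, nc in ((pr + 1, pc), (pr - 1, pc), (pr, pc + 1), (pr, pc - 1)):
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and image[nr][nc] < threshold):
                        seen[nr][nc] = True
                        stack.append((nr, nc))
            # Report the blob's centroid as the touch location.
            touches.append((sum(p[0] for p in pixels) / len(pixels),
                            sum(p[1] for p in pixels) / len(pixels)))
    return touches
```

Because every blob is reported separately, several fingers on the panel naturally yield several touch points, which is what makes the approach multi-touch.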

Mehta’s system represented proof-of-concept rather than practical commercial technology—the camera-based approach required substantial space beneath displays and processing power for real-time image analysis. However, his research demonstrated multi-touch feasibility and inspired subsequent development efforts.

At Bell Labs in 1984, Bob Boie developed an advanced multi-touch capacitive sensor capable of detecting multiple contact points and measuring contact pressure. His design used a grid of capacitive sensors creating two-dimensional touch position maps. While technically sophisticated, the system required expensive electronics limiting commercial deployment. Bell Labs’ work established important technical foundations for later multi-touch capacitive screens.

University of Toronto Input Research Group

The University of Toronto’s Input Research Group, led by Professor Bill Buxton, conducted extensive multi-touch research throughout the 1980s and 1990s. The group explored not just multi-touch detection but also interaction techniques leveraging multiple points of contact for zooming, rotating, and manipulating on-screen objects through two-handed gestures.

This research established interaction vocabularies and design patterns that would later appear in commercial multi-touch products. Pinch-to-zoom gestures, rotation through two-finger twisting, and two-handed manipulation techniques all appeared in academic papers from Toronto and other research institutions years before commercial implementation.
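
The geometry behind two-finger zoom and rotation is simple enough to show directly. The sketch below is a generic illustration rather than code from any of the research systems mentioned; the function name and the input format (two finger positions at the start and end of a gesture) are assumptions.

```python
import math


def pinch_and_rotate(start, end):
    """Derive zoom and rotation from two-finger movement.

    start, end: ((x1, y1), (x2, y2)) positions of the two fingers at
    the beginning and end of the gesture.
    Returns (scale, rotation_degrees): scale > 1 means the fingers
    spread apart; rotation is wrapped into [-180, 180).
    """
    (ax0, ay0), (bx0, by0) = start
    (ax1, ay1), (bx1, by1) = end
    d0 = math.hypot(bx0 - ax0, by0 - ay0)
    d1 = math.hypot(bx1 - ax1, by1 - ay1)
    if d0 == 0:
        raise ValueError("fingers start at the same point")
    # Angle of the line joining the two fingers, before and after.
    a0 = math.atan2(by0 - ay0, bx0 - ax0)
    a1 = math.atan2(by1 - ay1, bx1 - ax1)
    rotation = (math.degrees(a1 - a0) + 180) % 360 - 180
    return d1 / d0, rotation
```

For example, fingers that start one unit apart on a horizontal line and end two units apart on a vertical line correspond to a 2x zoom combined with a quarter turn.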

FingerWorks and Multi-Touch Gesture Recognition

FingerWorks, founded in 1998 by University of Delaware professors John Elias and Wayne Westerman, commercialized sophisticated multi-touch gesture recognition technology. Their products included multi-touch trackpads and keyboards recognizing complex gestures like pinching, spreading, and multi-finger scrolling.

FingerWorks products gained a following among users seeking ergonomic alternatives to traditional mice and relief from repetitive stress injuries through gesture-based control. The company’s technology combined capacitive multi-touch sensing with sophisticated gesture recognition algorithms distinguishing intentional gestures from inadvertent touches.

In 2005, Apple Inc. acquired FingerWorks, hiring Elias and Westerman and obtaining their multi-touch patents and technology. This acquisition proved crucial to Apple’s development of iPhone and iPad multi-touch interfaces that would transform consumer expectations about touchscreen capabilities just two years later.

The Smartphone Revolution (2000s)

The 2000s brought touchscreen technology from specialized applications into mainstream consumer consciousness through mobile device innovation that fundamentally changed expectations about human-computer interaction.

Palm, Windows Mobile, and Stylus-Based Interfaces

Early 2000s smartphones and PDAs (personal digital assistants) predominantly used resistive touchscreens requiring stylus input. Palm devices running Palm OS and Pocket PCs running Windows Mobile emphasized stylus-based interaction for handwriting recognition, precise target selection on small screens, and navigation through desktop-style interfaces miniaturized for handheld displays.

These devices found success in enterprise and professional markets but failed to achieve mass consumer adoption. Stylus-centric design created usability barriers: users needed to retrieve styluses before interaction, small styluses were easily lost, and interfaces optimized for stylus precision felt cumbersome for the quick, casual interaction many consumers preferred.

LG Prada: First Capacitive Touchscreen Phone

On December 12, 2006, LG Electronics announced the LG KE850 Prada, developed in collaboration with fashion house Prada. The Prada phone featured a large capacitive touchscreen designed for finger input without styluses, representing the first mass-produced mobile phone with capacitive touch technology. The device included a sophisticated user interface with animated transitions, finger-scrolling contacts and photo galleries, and touch-optimized icon-based navigation.

The LG Prada launched in select markets in May 2007 and won several design awards for its innovative interface approach. While successful within its luxury positioning, the Prada’s limited distribution and high price prevented the mainstream market impact its innovation merited. Its historical significance was subsequently overshadowed by another capacitive touchscreen phone announced just weeks after the Prada.

iPhone and the Capacitive Multi-Touch Revolution

On January 9, 2007, Apple Inc. CEO Steve Jobs introduced the iPhone at the Macworld conference in San Francisco. Jobs’s presentation emphasized the iPhone’s revolutionary interface: a large multi-touch capacitive display designed explicitly for finger input without styluses. The demonstration showed pinch-to-zoom gestures in photos and web pages, inertial scrolling with momentum physics, and fluid animated transitions creating a uniquely responsive feel.

Jobs criticized existing smartphone interfaces as cluttered with permanent physical buttons serving single functions. The iPhone’s software-defined interface used screen area flexibly, presenting relevant controls contextually while hiding unnecessary options. This approach maximized usable screen area on handheld devices while keeping interfaces simpler than stylus-based competitors requiring scrolling through packed screens of tiny controls.

The iPhone launched June 29, 2007, and quickly achieved cultural phenomenon status despite premium pricing. Early adopters praised the intuitive multi-touch interface requiring minimal instruction. The capacitive touchscreen’s responsiveness far exceeded resistive alternatives—touches registered immediately, scrolling felt fluid and precise, and multi-touch gestures worked reliably without careful stylus positioning.

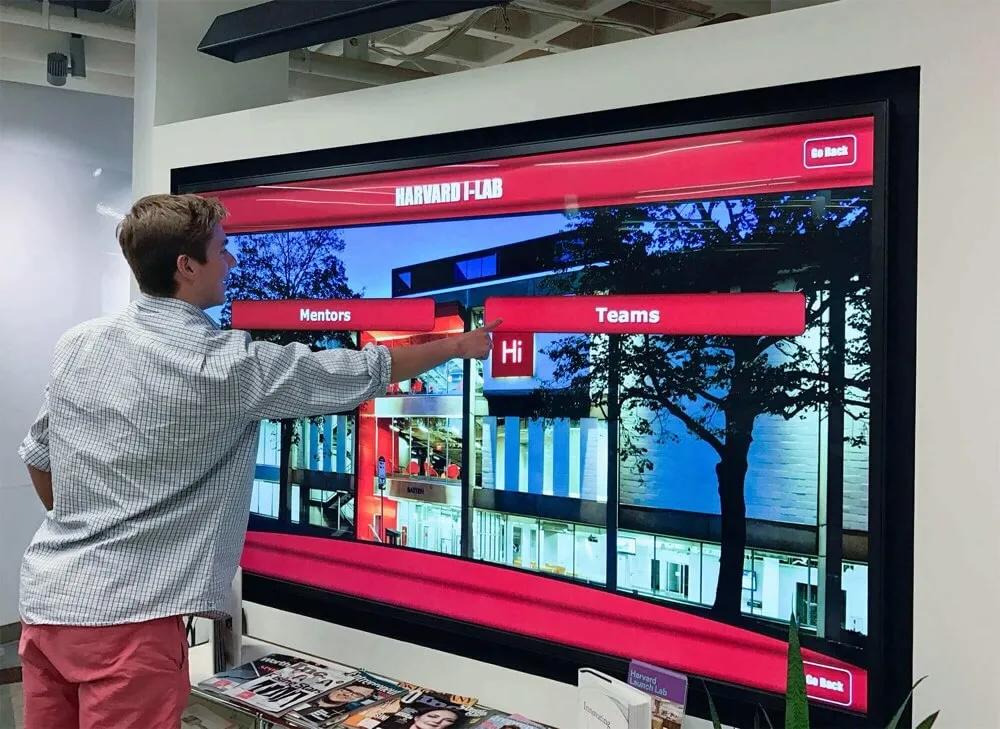

Smartphone-inspired gesture interfaces now define user expectations for all touchscreen applications including institutional displays

Perhaps most importantly, the iPhone established new baseline expectations for touchscreen interaction. After experiencing iPhone responsiveness and gesture sophistication, consumers found resistive stylus-based interfaces frustratingly primitive. This expectation shift affected not just competing smartphones but all touchscreen applications including ATMs, kiosks, automotive systems, and institutional displays—all of which increasingly adopted capacitive technology and gesture interactions matching smartphone conventions users now expected universally.

Android and Touchscreen Standardization

Google announced the Android operating system in November 2007, and the first Android device (HTC Dream/T-Mobile G1) was announced in September 2008 and went on sale that October. Android provided open-source smartphone software to hardware manufacturers, accelerating smartphone adoption across multiple price points and manufacturers.

Some early Android devices used resistive touchscreens, but manufacturers quickly standardized on capacitive displays matching iPhone capabilities. By 2010, virtually all new smartphones featured capacitive multi-touch screens with gesture support, establishing these capabilities as standard features rather than premium differentiators.

The combined iPhone and Android success transformed the touchscreen industry. Annual capacitive touchscreen production increased from millions to billions of units, creating economies of scale reducing costs dramatically. Improved manufacturing quality, larger screen sizes, and better touch sensitivity all benefited from massive investment supporting smartphone demand. These improvements subsequently enabled practical large-format touchscreen displays for institutional applications at costs unthinkable just years earlier.

Modern Touchscreen Technology and Applications (2010s-Present)

The 2010s brought touchscreen technology maturity and ubiquity across consumer devices, industrial applications, and institutional environments including schools, museums, and corporate facilities.

Tablet Computing and the iPad

Apple launched the iPad tablet in April 2010, applying iPhone’s multi-touch interface to a larger 9.7-inch display. The iPad demonstrated that capacitive multi-touch worked effectively at larger sizes, validating tablets as a distinct product category between smartphones and laptop computers.

The iPad’s success prompted competing tablets running Android and Windows, establishing tablet computing as a mainstream category. Tablets found particular success in education, healthcare, retail, and field service applications, where screens larger than a smartphone’s provided better content visibility while maintaining mobility impossible with laptop or desktop computers.

Large-Format Commercial Touchscreens

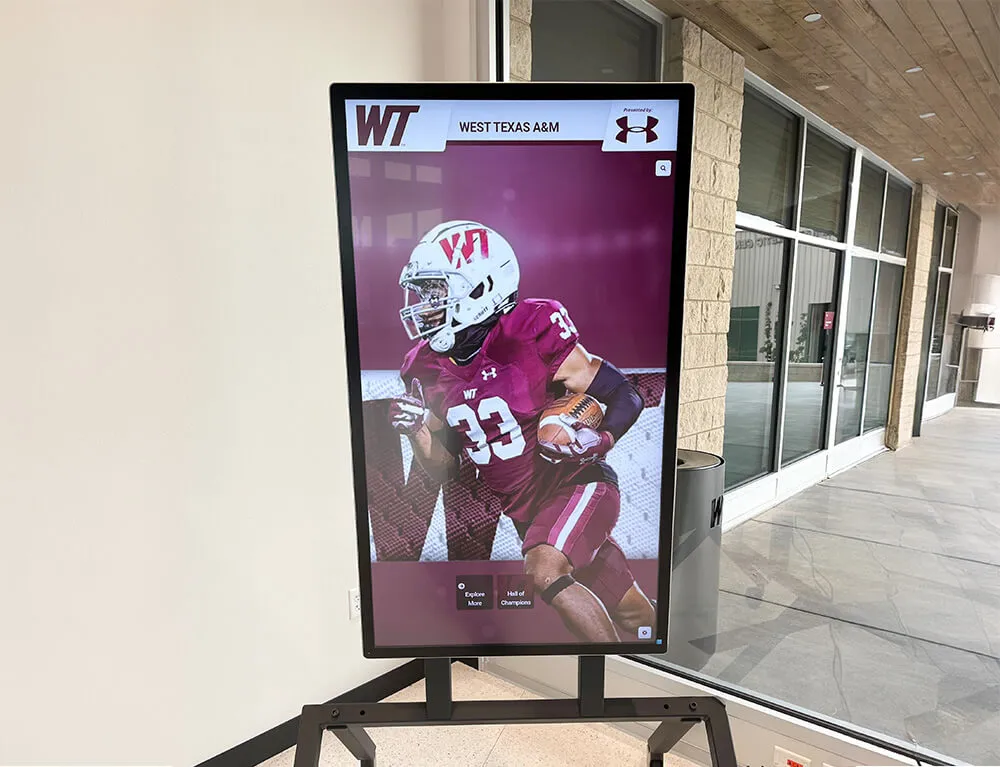

Smartphone and tablet success created market demand for large commercial touchscreens serving institutional, corporate, and public applications. Manufacturers developed commercial-grade capacitive touchscreens in sizes from 32 to 98 inches, rated for continuous operation in public environments.

These large-format displays enabled sophisticated applications including interactive wayfinding and building directories in corporate campuses and healthcare facilities; self-service check-in kiosks in hotels, airports, and hospitals; digital recognition displays celebrating achievements in schools and universities; museum exhibits and interpretive displays engaging visitors through interactive exploration; retail product information and configuration systems; and collaborative meeting room displays supporting team interaction.

Organizations implementing solutions like digital halls of fame using touchscreen technology found that modern capacitive displays delivered reliability and responsiveness meeting user expectations shaped by smartphone experiences. Unlike earlier resistive technology requiring calibration and showing degraded performance over time, commercial capacitive screens maintained accuracy and sensitivity across years of public use.

Advanced Touch Technologies and Innovations

Contemporary touchscreen development continues pushing capabilities beyond original implementations. Current innovations include:

In-Cell and On-Cell Touch Integration: Modern smartphone displays integrate touch sensors directly into LCD or OLED display structures rather than using separate touch overlay layers. This integration reduces display thickness, improves optical quality by eliminating air gaps between layers, and enhances touch sensitivity by positioning sensors closer to finger contact points.

Force-Sensitive Touch (3D Touch/Haptic Touch): Some devices measure touch pressure, letting interfaces distinguish light taps from firm presses. Apple’s 3D Touch (now discontinued) worked this way. Haptic feedback systems complement such input by using precisely controlled vibration to simulate a physical click, confirming touch registration without moving buttons.

Stylus Support with Palm Rejection: Sophisticated devices support both finger touch and precise stylus input using active digitizers detecting powered styluses while ignoring inadvertent palm contact during writing. Apple Pencil, Microsoft Surface Pen, and Wacom styluses enable professional creative applications on touchscreen devices.

Ultrasonic Fingerprint Sensing: Advanced smartphones incorporate ultrasonic fingerprint sensors beneath display surfaces, reading fingerprint details through screen glass using high-frequency sound waves. This technology enables secure biometric authentication without visible sensors or home buttons breaking screen aesthetics.

Commercial touchscreen installations now serve institutional recognition, information access, and community engagement across educational and organizational facilities

Touchscreen Technology Types and Comparison

Understanding different touchscreen technologies helps organizations select appropriate solutions for specific applications based on performance characteristics, durability requirements, and environmental conditions.

Capacitive Touchscreens

Capacitive technology, pioneered by E.A. Johnson in 1965, dominates modern touchscreen applications through superior performance characteristics aligned with contemporary user expectations.

Operating Principles: Capacitive screens use transparent electrode layers creating uniform electrostatic fields across display surfaces. Human fingers, being electrically conductive, distort these fields when touching screens. Controller circuits measure field disturbances, calculating precise touch coordinates. Projected capacitive technology (PCAP), the current standard implementation, uses electrode grids enabling multi-touch detection and exceptional touch precision.
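The coordinate calculation described above can be illustrated with a weighted centroid over the electrode grid. This is a minimal sketch, not how any particular controller works: the function name, the 5 mm electrode pitch, the noise threshold, and the sample readings are all hypothetical, and the sketch handles a single touch only (a real multi-touch controller would first separate the grid into distinct contact regions).

```python
from typing import List, Optional, Tuple


def touch_centroid(
    deltas: List[List[float]],
    pitch_mm: float = 5.0,
    threshold: float = 10.0,
) -> Optional[Tuple[float, float]]:
    """Estimate one touch position (x_mm, y_mm) from capacitance changes.

    deltas[row][col] is the change from the untouched baseline at each
    electrode intersection. Units, pitch, and threshold are assumptions.
    """
    total = 0.0
    x_sum = 0.0
    y_sum = 0.0
    for row, values in enumerate(deltas):
        for col, value in enumerate(values):
            if value >= threshold:  # ignore readings below the noise floor
                total += value
                x_sum += value * col * pitch_mm
                y_sum += value * row * pitch_mm
    if total == 0.0:
        return None  # nothing exceeded the threshold, so no touch
    return (x_sum / total, y_sum / total)


# Hypothetical readings: a finger centered slightly right of column 1.
grid = [
    [0, 0, 0, 0],
    [0, 20, 60, 0],
    [0, 20, 60, 0],
    [0, 0, 0, 0],
]
print(touch_centroid(grid))  # (8.75, 7.5)
```

Weighting by signal strength is what lets the estimate fall between electrodes, which is one reason a coarse grid can still report fine-grained positions.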

Advantages: Capacitive screens offer excellent optical clarity with minimal light loss through sensor layers, superior durability with protective glass surfaces resisting scratches and impact, multi-touch capability supporting complex gestures, high touch sensitivity responding to light finger touches, and tolerance of non-conductive contaminants like dust. Water is a different matter: droplets are conductive enough to disturb the sensing field, so wet screens can register false touches.

Limitations: Capacitive screens require conductive materials, preventing use with standard gloves or non-conductive styluses (though specialized conductive gloves and active styluses work effectively). Cold weather can reduce sensitivity as finger conductivity changes. Environmental factors like extreme moisture can create false touches.

Typical Applications: Smartphones, tablets, commercial kiosks, interactive displays, automotive touchscreens, and institutional applications including interactive recognition displays and digital signage.

Resistive Touchscreens

Resistive technology, developed by Dr. Sam Hurst in the 1970s, continues serving specialized applications despite declining market share relative to capacitive alternatives.

Operating Principles: Resistive screens use two flexible transparent layers separated by microscopic spacer dots. The inner surface of each layer has transparent conductive coating. When users press the top layer, it makes electrical contact with the bottom layer at touch points. Voltage measurements on both layers enable coordinate calculation.
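As a rough illustration of that coordinate calculation, the sketch below maps raw analog-to-digital readings from each layer to pixel positions with a simple linear calibration. The `Calibration` fields, the raw values, and the 800x480 resolution are hypothetical placeholders; real controllers often apply more elaborate calibration than this.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Calibration:
    """Raw ADC readings observed at the screen edges (assumed values)."""
    x_min: int
    x_max: int
    y_min: int
    y_max: int


def raw_to_pixels(
    raw_x: int,
    raw_y: int,
    cal: Calibration,
    width_px: int,
    height_px: int,
) -> Tuple[int, int]:
    """Convert raw voltage readings to pixel coordinates."""
    # Position along each axis as a fraction of the calibrated range.
    fx = (raw_x - cal.x_min) / (cal.x_max - cal.x_min)
    fy = (raw_y - cal.y_min) / (cal.y_max - cal.y_min)
    # Clamp so that readings outside the calibrated range stay on screen.
    fx = min(max(fx, 0.0), 1.0)
    fy = min(max(fy, 0.0), 1.0)
    return (round(fx * (width_px - 1)), round(fy * (height_px - 1)))


cal = Calibration(x_min=200, x_max=3900, y_min=300, y_max=3800)
print(raw_to_pixels(1125, 1175, cal, 800, 480))  # (200, 120)
```

The calibration step is also where drift shows up: if the edge readings change as the layers age, the same press maps to a different pixel until the screen is recalibrated.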

Advantages: Resistive screens work with any input—fingers, gloved hands, styluses, or objects applying pressure. They operate reliably in harsh environments with extreme temperatures, humidity, or contaminants. Production costs remain lower than capacitive alternatives for smaller displays. Stylus input provides exceptional precision for detailed work.

Limitations: Resistive screens typically support only single-touch detection (though multi-touch resistive screens exist at higher costs). Flexible top layers scratch more easily than glass capacitive surfaces. Touch requires pressure rather than light contact. Optical clarity is lower due to multiple layers and air gaps. Response feels less immediate compared to capacitive sensitivity.

Typical Applications: Industrial control panels, medical devices where gloves are mandatory, outdoor applications with temperature extremes, budget point-of-sale terminals, and specialized stylus-dependent applications.

Infrared Touchscreens

Infrared technology, used in early PLATO educational systems and HP-150 computers, continues finding specialized applications leveraging its unique characteristics.

Operating Principles: LED emitters and photodetectors positioned around screen bezels create invisible infrared light grids across screen surfaces. When objects interrupt infrared beams, receiving photodetectors detect light blockage. Controller circuits determine touch coordinates by identifying which horizontal and vertical beams are interrupted.
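The beam-interruption logic can be sketched as follows, assuming a single touch and evenly spaced beams. The function names and the 5 mm beam pitch are illustrative assumptions rather than details of any specific product.

```python
from typing import Optional, Sequence, Tuple


def axis_position(blocked: Sequence[bool], pitch_mm: float) -> Optional[float]:
    """Return the center of the blocked beams along one axis, in mm."""
    hits = [i for i, is_blocked in enumerate(blocked) if is_blocked]
    if not hits:
        return None  # no beam interrupted on this axis
    # Midpoint between the first and last interrupted beams.
    return (hits[0] + hits[-1]) / 2 * pitch_mm


def ir_touch(
    x_blocked: Sequence[bool],
    y_blocked: Sequence[bool],
    pitch_mm: float = 5.0,
) -> Optional[Tuple[float, float]]:
    """Combine both axes into one touch point; None if either axis is clear."""
    x = axis_position(x_blocked, pitch_mm)
    y = axis_position(y_blocked, pitch_mm)
    if x is None or y is None:
        return None
    return (x, y)


# A fingertip wide enough to block two beams on one axis and one on the other.
x_beams = [False, False, True, True, False, False]
y_beams = [False, True, False, False]
print(ir_touch(x_beams, y_beams))  # (12.5, 5.0)
```

Taking the midpoint of the blocked run means a wide object such as a gloved finger still resolves to a single point, though resolution stays limited by beam spacing.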

Advantages: Infrared systems work with any object, including fingers, gloved hands, styluses, or pointing devices. Because no overlay sits in front of the display, optical clarity is unaffected. The design is extremely durable, with no sensors on the screen surface to wear out, and it supports multi-touch when beam density is sufficient to resolve multiple simultaneous interruptions.

Limitations: Because any object that interrupts a beam registers as a touch, infrared screens can’t distinguish intentional touches from inadvertent contact or foreign objects. Strong ambient infrared light, such as direct sunlight, can interfere with detection. Dust or debris accumulating on bezel sensors requires regular cleaning. Bezels must accommodate emitters and detectors, increasing frame size around active screen areas.

Typical Applications: Large-format displays where durability matters more than precision, outdoor kiosks requiring glove operation, industrial environments with harsh conditions, and educational applications requiring low maintenance.

Surface Acoustic Wave (SAW) Touchscreens

SAW technology offers excellent optical clarity and surface durability for applications where these characteristics outweigh environmental sensitivity limitations.

Operating Principles: Ultrasonic transducers at screen corners generate acoustic waves distributed across glass surfaces by reflector patterns. Receiving transducers detect acoustic reflections. When users touch screens, fingers absorb acoustic energy at contact points. Controller circuits calculate touch coordinates by analyzing acoustic disruption patterns.
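One simplified way to picture the analysis step: the controller compares the received signal against an untouched baseline and looks for the point of greatest attenuation. The sketch below assumes the samples in the receive window map linearly onto positions along one axis; the function name, the 20% detection threshold, and the sample data are hypothetical.

```python
from typing import Optional, Sequence


def saw_axis_position(
    baseline: Sequence[float],
    received: Sequence[float],
    axis_length_mm: float,
    min_drop: float = 0.2,
) -> Optional[float]:
    """Locate a touch along one axis from the dip in received amplitude.

    baseline and received hold signal amplitudes sampled across the
    receive window. A linear sample-to-position mapping is assumed.
    """
    if len(baseline) != len(received) or len(baseline) < 2:
        raise ValueError("baseline and received need equal length of 2 or more")
    best_index = None
    best_drop = min_drop
    for i, (b, r) in enumerate(zip(baseline, received)):
        if b <= 0:
            continue  # skip samples with no usable baseline
        drop = (b - r) / b  # fraction of energy absorbed at this sample
        if drop > best_drop:
            best_drop = drop
            best_index = i
    if best_index is None:
        return None  # no dip deeper than the threshold
    return best_index * axis_length_mm / (len(baseline) - 1)


baseline = [1.0] * 11
received = [1.0, 1.0, 1.0, 0.9, 0.5, 0.9, 1.0, 1.0, 1.0, 1.0, 1.0]
print(saw_axis_position(baseline, received, axis_length_mm=300.0))  # 120.0
```

The same comparison explains the technology's sensitivity to contamination: a water droplet absorbs energy just as a finger does and produces a similar dip.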

Advantages: SAW screens provide exceptional optical clarity since only glass surfaces cover displays without coatings or overlays. Glass surfaces prove highly durable and resistant to scratches. Because detection depends on a finger or other soft object absorbing acoustic energy, SAW responds only to physical contact and cannot track a finger hovering above the glass, although some controllers use the amount of absorbed energy to estimate how firmly the screen is pressed.

Limitations: Surface contaminants like liquids, dust, or adhering materials can block acoustic wave propagation creating false touches or dead zones. This environmental sensitivity limits deployments to relatively clean indoor environments. Screens require periodic cleaning to maintain performance.

Typical Applications: Indoor kiosks in controlled environments, ATMs in climate-controlled lobbies, point-of-sale terminals in clean retail environments, and information displays where optical clarity is paramount.

Impact on User Experience and Interaction Design

Touchscreen technology fundamentally transformed interface design principles and user expectations about digital interaction across all application domains.

Direct Manipulation and Reduced Cognitive Load

Touchscreens enable direct manipulation—users physically touch objects they want to interact with rather than operating through indirect input devices like mice. This directness creates more intuitive interaction aligned with physical world expectations about cause and effect.

Human-computer interaction research generally suggests that direct manipulation reduces cognitive load compared to indirect interfaces, because users don’t need to maintain separate mental models of cursor position and target location. The reduced abstraction makes interfaces more accessible to users with limited technical experience, elderly populations, and children still developing abstract reasoning capabilities.

Educational institutions implementing touchscreen software for student-facing applications report that direct manipulation enables independent use without training or assistance—students intuitively understand that touching desired content accesses it, requiring no instruction about mouse cursor operation or keyboard navigation that indirect interfaces demand.

Gesture-Based Interaction Languages

Multi-touch technology created new interaction vocabularies using natural gestures rather than learned button or menu commands. Pinch gestures zoom intuitively—fingers moving apart enlarge content while fingers moving together shrink content, directly corresponding to physical analogies of bringing objects closer or pushing them away. Swipe gestures scroll naturally through content with inertial physics matching physical page-turning or paper-scrolling behaviors.

These gesture languages feel intuitive because they leverage physical world understanding rather than requiring memorization of abstract command structures. Users quickly master gesture interactions through brief experimentation, while keyboard shortcuts and menu hierarchies demand explicit instruction and practice.
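The pinch gesture described above reduces to a simple ratio: the zoom factor is the current distance between the two fingers divided by their distance when the gesture began. The sketch below shows only that arithmetic; the function name and coordinates are illustrative, and production gesture recognizers add smoothing, thresholds, and rotation handling that are omitted here.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def pinch_scale(start_a: Point, start_b: Point, now_a: Point, now_b: Point) -> float:
    """Return the zoom factor implied by two fingers moving apart or together."""
    start_distance = math.dist(start_a, start_b)
    if start_distance == 0:
        raise ValueError("fingers must start at two distinct points")
    # Above 1.0 means the fingers spread (zoom in); below 1.0 means they closed.
    return math.dist(now_a, now_b) / start_distance


# Fingers start 100 px apart and spread to 200 px apart.
print(pinch_scale((100, 100), (200, 100), (50, 100), (250, 100)))  # 2.0
```

Because the factor depends only on the ratio of distances, the gesture behaves the same wherever on the screen it starts and however large the user's hands are.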

However, gesture discoverability remains challenging—users may not know what gestures applications support without explicit instruction or visual hints. Effective touchscreen experience design balances leveraging standard gestures users expect from smartphone experience while providing clear affordances showing available interaction possibilities.

Accessibility Considerations and Universal Design

While touchscreens improve accessibility for many users by eliminating indirect pointing devices requiring fine motor control, they present challenges for users with certain disabilities.

Vision-impaired users struggle with touchscreens lacking tactile feedback indicating button boundaries and positions. Unlike physical keyboards providing tactile landmarks, flat touchscreens offer no physical reference points enabling non-visual navigation. Screen reader software helps, but touch interaction remains more challenging than keyboard alternatives for blind users.

Users with motor control challenges, tremors, or limited precision may accidentally trigger incorrect touch targets or struggle with gesture timing requirements. Inclusive institutional touchscreen implementations accommodate varied abilities through generous touch targets exceeding minimum size recommendations, forgiving interface designs allowing easy error correction, and alternative interaction modes supporting varied user capabilities.
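Touch target size is ultimately a physical measurement, so the same pixel dimensions produce very different targets on a phone and on a large wall display. The sketch below converts pixel sizes to millimeters for a given screen. The 9 mm default minimum is an illustrative placeholder, not a figure from this article; substitute whichever accessibility guideline applies to the installation.

```python
import math

MM_PER_INCH = 25.4


def display_ppi(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixels per inch, from the resolution and the diagonal screen size."""
    return math.hypot(width_px, height_px) / diagonal_in


def target_size_mm(size_px: float, ppi: float) -> float:
    """Physical size in millimeters of a span of pixels."""
    return size_px / ppi * MM_PER_INCH


def meets_minimum(width_px: float, height_px: float, ppi: float, min_mm: float = 9.0) -> bool:
    """True if the smaller side of the target is at least min_mm (assumed value)."""
    smaller_side = min(target_size_mm(width_px, ppi), target_size_mm(height_px, ppi))
    return smaller_side >= min_mm


# A hypothetical 55-inch 1920x1080 display has roughly 40 pixels per inch.
ppi = display_ppi(1920, 1080, 55.0)
print(meets_minimum(20, 20, ppi))  # True: 20 px is about 12.7 mm here
print(meets_minimum(10, 40, ppi))  # False: the 10 px side is about 6.3 mm
```

Checking the smaller side matters because a wide but shallow button is still hard to hit for users with tremors or limited precision.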

Modern Institutional Applications

Contemporary touchscreen technology serves diverse institutional purposes across educational, cultural, corporate, and public facilities with applications extending far beyond smartphones and tablets.

Educational Institution Deployments

Schools, colleges, and universities implement large-format touchscreens for varied purposes supporting institutional missions and student engagement.

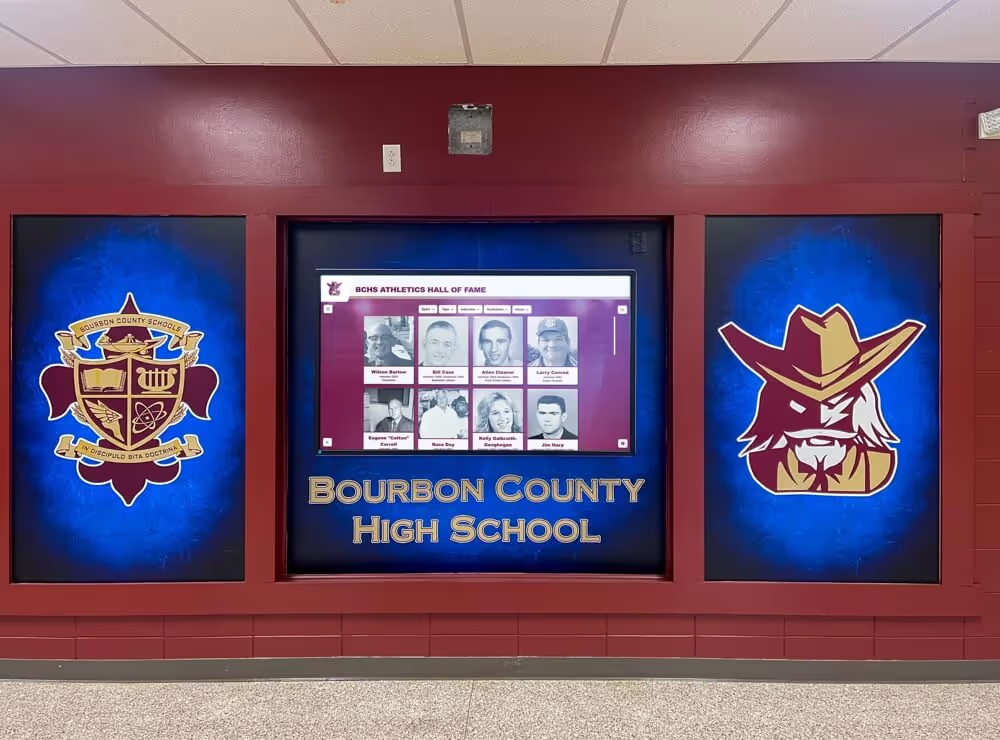

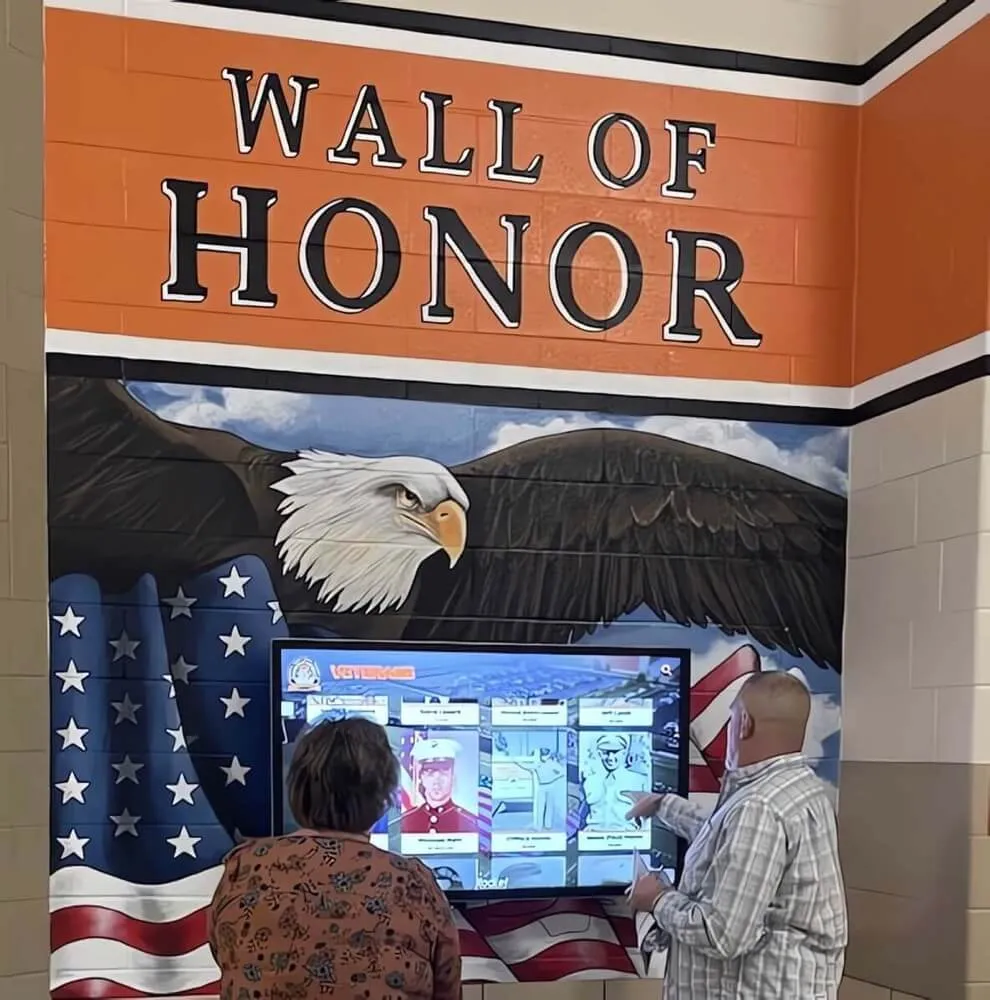

Digital Recognition and Hall of Fame Displays: Interactive touchscreen displays celebrate student achievements, alumni accomplishments, and institutional history through searchable databases with rich multimedia profiles. Unlike traditional static plaques limited by physical space, digital recognition accommodates unlimited honorees with comprehensive storytelling including photos, videos, and detailed biographical content.

Organizations implementing solutions like Rocket Alumni Solutions find that touchscreen recognition displays generate substantially higher engagement than static alternatives. Visitors spend several minutes exploring interactive content compared to seconds glancing at traditional plaques. Search functionality enables instant location of specific individuals, while browse modes support discovery of interesting stories and unexpected connections within institutional heritage.

Wayfinding and Campus Information: Large campus facilities benefit from touchscreen wayfinding kiosks helping visitors, prospective students, and even current students navigate complex building layouts, locate specific offices or classrooms, identify dining and amenity locations, and access event calendars and campus news.

Interactive Learning and Exhibits: Museum-style educational exhibits using touchscreen interaction engage students through exploratory learning experiences where they control investigation pace and follow curiosity-driven paths through content. Science demonstrations, historical timelines, and subject-matter deep-dives become more engaging through interactive presentation compared to passive static displays.

School hallway installations position touchscreen displays where students naturally encounter institutional recognition and information throughout daily activities

Museum and Cultural Institution Applications

Museums, historical societies, visitor centers, and cultural institutions leverage touchscreen technology for interpretive exhibits and collection exploration.

Interactive exhibits let visitors explore artifact details beyond physical label limitations, access multimedia content including curator commentary and historical context, navigate complex timelines and relationships between exhibits, and customize exploration based on personal interests rather than following predetermined paths.

Touchscreen interfaces accommodate varied visitor preferences—some people prefer reading detailed text, others respond to visual content, still others engage most effectively with video presentations. Multi-modal touchscreen content serves diverse learning styles within single installations more effectively than any static medium.

Corporate and Healthcare Facilities

Corporate offices, healthcare facilities, and hospitality venues deploy touchscreens for wayfinding, directories, donor recognition, and self-service applications including visitor check-in and registration, employee directories and department locations, donor walls celebrating philanthropic support, service kiosks for appointment scheduling and information access, and meeting room booking and resource reservation.

These applications reduce reception desk workload while providing 24/7 self-service access extending beyond staffed hours. The familiar smartphone-like interfaces require minimal instruction, enabling immediate productivity for visitors unfamiliar with specific facilities or organizations.

Future Directions and Emerging Technologies

Touchscreen technology continues evolving with several emerging developments promising enhanced capabilities and new application possibilities.

Flexible and Foldable Displays

Flexible OLED displays combined with flexible touch sensors enable foldable smartphones and future applications including wearable displays conforming to curved surfaces, rollable displays for portable large-screen devices, and conformable touchscreens for automotive interiors and aerospace applications with non-planar mounting surfaces.

Samsung, Motorola, and other manufacturers already ship foldable smartphones featuring flexible capacitive touchscreens maintaining full functionality across repeated folding cycles. While current implementations remain expensive and show durability limitations, ongoing development promises more robust flexible touchscreen technology enabling novel form factors impossible with rigid glass displays.

Haptic Feedback and Tactile Touchscreens

Advanced haptic feedback systems provide tactile sensations simulating button clicks, texture variations, and physical detents helping users navigate interfaces through touch feel. Current implementations use vibration motors creating localized feedback, but emerging ultrasonic haptic technology promises more sophisticated tactile sensations.

Tactile feedback addresses touchscreen accessibility limitations by providing physical confirmation of touch registration and button boundaries without requiring vision. Enhanced haptics could enable more confident interaction for elderly users, people with low vision, and situations where visual attention must focus elsewhere like automotive applications requiring eyes-on-road operation.

Touchless Gesture Recognition

Computer vision systems that detect hand gestures without physical screen contact gained interest during the COVID-19 pandemic amid concerns about transmission via shared surfaces. Purely touchless interaction faces discoverability challenges, since users don’t know which gestures a system recognizes, but hybrid approaches combining touch and touchless options provide flexibility for users preferring either interaction mode.

Automotive applications particularly benefit from touchless gesture recognition, which lets drivers adjust controls without the precise targeting that touchscreens demand and that is difficult while operating a vehicle. Gesture control for volume, climate, and information system functions can reduce distraction compared to locating and pressing touchscreen targets.

AI and Personalization

Artificial intelligence integration promises adaptive touchscreen interfaces adjusting to individual users and usage contexts. Potential applications include personalized content recommendations based on interaction history, interface layouts optimizing for detected user preferences, accessibility accommodations automatically activated based on detected user needs, and predictive interfaces surfacing likely next actions reducing required interactions.

While AI personalization raises privacy considerations requiring careful implementation, thoughtful applications could substantially improve user experience and efficiency through reduced cognitive load and streamlined workflows adapting to how individuals actually use systems rather than forcing standard interfaces on diverse user populations.

Selecting Touchscreen Solutions for Institutional Applications

Organizations implementing touchscreen installations for recognition, wayfinding, or information access benefit from systematic evaluation ensuring technology selections match specific application requirements and organizational contexts.

Requirements Assessment

Before technology selection, institutions should clearly define objectives—what should touchscreen installations accomplish? Common goals include celebrating achievements and institutional heritage, simplifying campus navigation for visitors, providing self-service information access, engaging communities through interactive storytelling, and reducing staff workload through automated information provision.

Understanding primary users and contexts informs appropriate design decisions. Student-facing applications need simple interfaces supporting quick casual use between classes. Visitor-focused applications require exceptionally clear wayfinding that needs no instructions. Alumni and community displays benefit from deep content supporting extended exploration of institutional heritage.

Technology and Platform Selection

Not all touchscreen solutions deliver equal results for institutional applications. Organizations should evaluate purpose-built platforms designed specifically for institutional recognition and information access, commercial-grade hardware rated for continuous operation in public environments, intuitive content management systems enabling non-technical staff to maintain displays, comprehensive vendor support including training and ongoing assistance, and proven experience with similar organizational types and applications.

Critical evaluation criteria include ease of content updates without technical expertise requirements, search and filtering functionality enabling efficient content discovery, unlimited capacity accommodating comprehensive content without artificial limitations, multimedia support for photos, videos, and varied content types, web access extending content beyond physical displays, and analytics tracking engagement to demonstrate installation value to stakeholders.

Organizations implementing recognition displays should prioritize solutions designed specifically for celebrating achievements rather than generic digital signage platforms repurposed for the task. Purpose-built platforms like Rocket Alumni Solutions provide recognition-optimized features, including honoree profile structures, search by name and achievement category, and institutional branding integration, that general-purpose alternatives typically lack.

Installation and Ongoing Support

Professional installation ensures reliable operation through proper mounting supporting display weight safely, adequate electrical power and network connectivity, appropriate height and positioning for accessibility and viewing comfort, protection from environmental factors like direct sunlight causing screen glare, and clean cable management maintaining professional appearance.

Organizations should verify total cost of ownership including initial hardware and software investment, recurring licensing fees for cloud-based platforms, support contract costs for technical assistance, and planned upgrade cycles ensuring technology remains current as capabilities evolve and user expectations change based on latest consumer device experiences.
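The cost components listed above combine into a simple sum of one-time and recurring costs over the planning horizon. The sketch below shows that arithmetic; every figure in the example is a made-up placeholder, not a quote or a typical price.

```python
def total_cost_of_ownership(
    hardware: float,
    software: float,
    annual_license: float,
    annual_support: float,
    years: int,
    planned_upgrades: float = 0.0,
) -> float:
    """Sum one-time and recurring costs over a planning horizon in years."""
    if years < 0:
        raise ValueError("years must not be negative")
    upfront = hardware + software
    recurring = years * (annual_license + annual_support)
    return upfront + recurring + planned_upgrades


# Placeholder figures for a five-year horizon.
estimate = total_cost_of_ownership(
    hardware=6000.0,
    software=2000.0,
    annual_license=1200.0,
    annual_support=300.0,
    years=5,
)
print(estimate)  # 15500.0
```

Running the same calculation for each shortlisted vendor over the same horizon makes recurring fees directly comparable with larger upfront purchases.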

Conclusion: From Experimental Technology to Essential Interface

The history of touchscreen technology represents one of computing’s most transformative evolutions. What began as specialized air traffic control interfaces developed by E.A. Johnson in 1965 gradually expanded through decades of refinement into the dominant interaction paradigm through which billions of people worldwide now access digital information, navigate applications, and engage with institutional recognition displays.

The journey from single-touch resistive screens requiring stylus precision to responsive multi-touch capacitive displays supporting sophisticated gestures fundamentally changed expectations about human-computer interaction. Direct manipulation replaced indirect pointing devices for many applications. Intuitive gesture languages reduced learning curves that once made computers intimidating for non-technical users. And touch-optimized interfaces enabled entirely new application categories impossible with keyboard and mouse alternatives.

For educational institutions, cultural organizations, and public facilities, modern touchscreen technology provides powerful tools for achieving institutional missions. Digital recognition displays celebrate achievements comprehensively while engaging communities through interactive storytelling. Wayfinding applications simplify navigation through complex facilities. Information kiosks provide self-service access reducing staff workload. And interpretive exhibits create exploratory learning experiences impossible with static displays.

As touchscreen technology continues evolving through flexible displays, enhanced haptic feedback, gesture recognition, and AI personalization, new possibilities emerge for creating even more intuitive, accessible, and engaging interactive experiences. Organizations implementing touchscreen solutions today benefit from decades of development that transformed experimental concepts into reliable, cost-effective platforms delivering measurable value through improved engagement, enhanced communication effectiveness, and strengthened connections between institutions and the communities they serve.

Ready to explore modern touchscreen applications for your institution? Discover how to design engaging touchscreen experiences, explore comprehensive touchscreen software solutions for institutional applications, and learn about implementing interactive touchscreen displays for school recognition programs. Each builds on touchscreen technology’s evolution from 1960s experimental interfaces to today’s sophisticated platforms for celebrating achievement and engaging modern audiences.